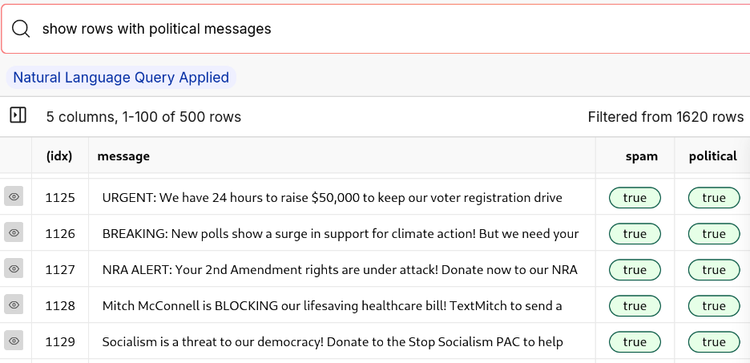

How Well Can Llama 3.1 8B Detect Political Spam? [4/4]

It took only about 11 minutes to fine-tune Llama 3.1 8B on our synthetic political spam dataset using ReFT.

Fine-Tuning Llama 3.1 8B in Under 12 Minutes [3/4]

Meta recently released Llama 3.1, including its 405-billion-parameter model, which is the most capable open model

How to De-duplicate and Clean Synthetic Data [2/4]

Synthetic data has shown promising results for training and fine-tuning large models, such as Llama 3.1 and the

Create Your Own Synthetic Data With Only 5 Political Spam Texts [1/4]

With the 2024 elections coming up, spam and political texts are more prevalent than ever as political campaigns increasingly turn

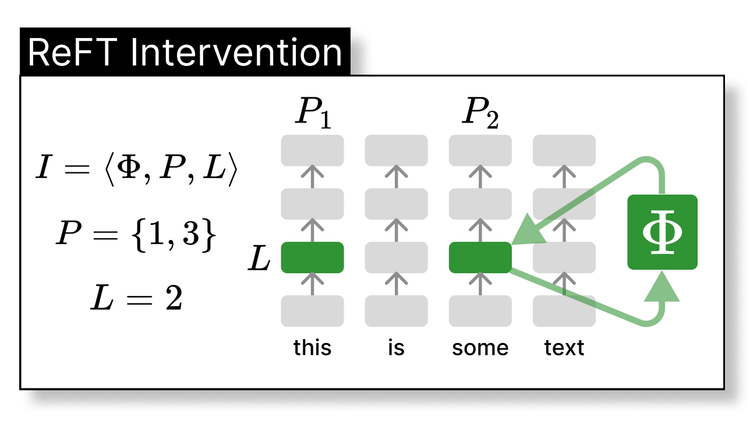

Fine-tuning Llama 3 in 14 minutes using ReFT

If you have been fine-tuning models recently, you have most likely used LoRA. While LoRA has been the dominant PEFT
