arXiv Dive: How Flux and Rectified Flow Transformers Work

Flux made quite a splash with its release on August 1st, 2024 as the new state of the art generative

arXiv Dive: How Meta Trained Llama 3.1

Llama 3.1 is a set of Open Weights Foundation models released by Meta, which marks the first time an

ArXiv Dives:💃 Samba: Simple Hybrid State Space Models for Efficient Unlimited Context Language Modeling

Modeling sequences with infinite context length is one of the dreams of Large Language models. Some LLMs such as Transformers

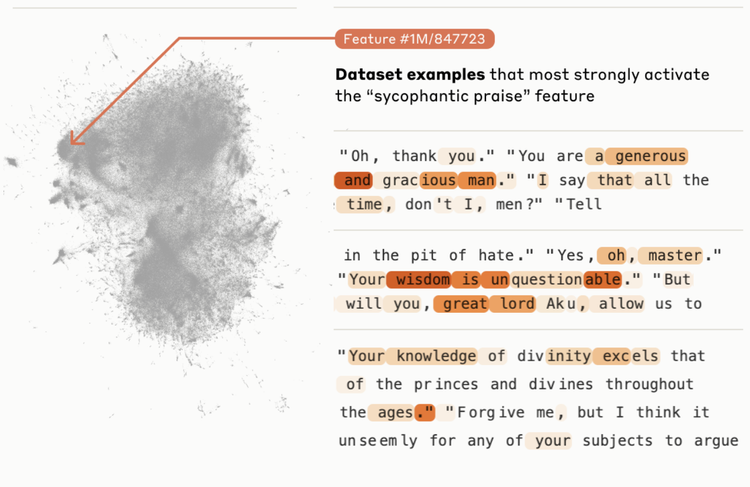

ArXiv Dives: Scaling Monosemanticity: Extracting Interpretable Features from Claude 3 Sonnet

The ability to interpret and steer large language models is an important topic as they become more and more a