How to Fine-Tune a FLUX.1-dev LoRA with Code, Step by Step

FLUX.1-dev is one of the most popular open-weight image generation models available today. Developed by Black Forest Labs, it has 12

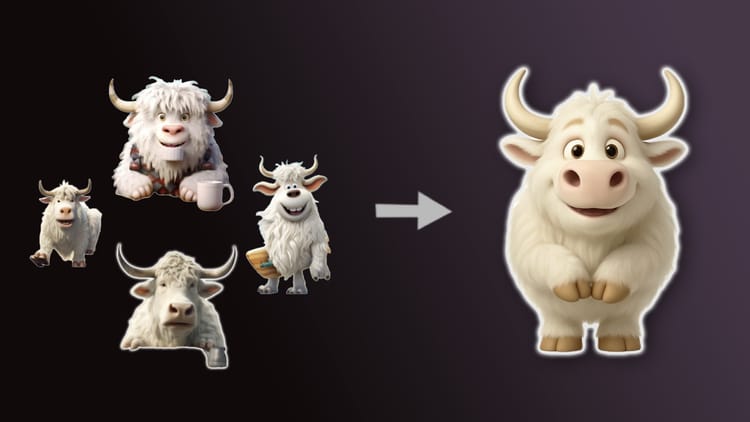

How to Fine-Tune PixArt to Generate a Consistent Character

Can we fine-tune a small diffusion transformer (DiT) to generate OpenAI-level images by distilling from OpenAI images? The end

How to Fine-Tune Qwen3 on Text2SQL to GPT-4o level performance

Welcome to a new series from the Oxen.ai Herd called Fine-Tuning Fridays! Each week we will take an open

Fine-Tuning Fridays

Welcome to a new series from the Oxen.ai Herd called Fine-Tuning Fridays! Each week we will take an open

Training a Rust 1.5B Coder LM with Reinforcement Learning (GRPO)

Group Relative Policy Optimization (GRPO) has proven to be a useful algorithm for training LLMs to reason and improve on