Training a Rust 1.5B Coder LM with Reinforcement Learning (GRPO)

Group Relative Policy Optimization (GRPO) has proven to be a useful algorithm for training LLMs to reason and improve on

🧠 GRPO VRAM Requirements For the GPU Poor

Since the release of DeepSeek-R1, Group Relative Policy Optimization (GRPO) has become the talk of the town for Reinforcement Learning

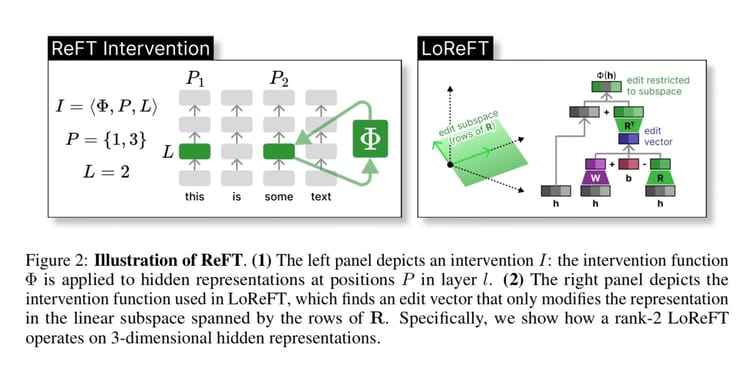

ArXiv Dives: How ReFT works

ArXiv Dives is a series of live meetups that take place on Fridays with the Oxen.ai community. We believe

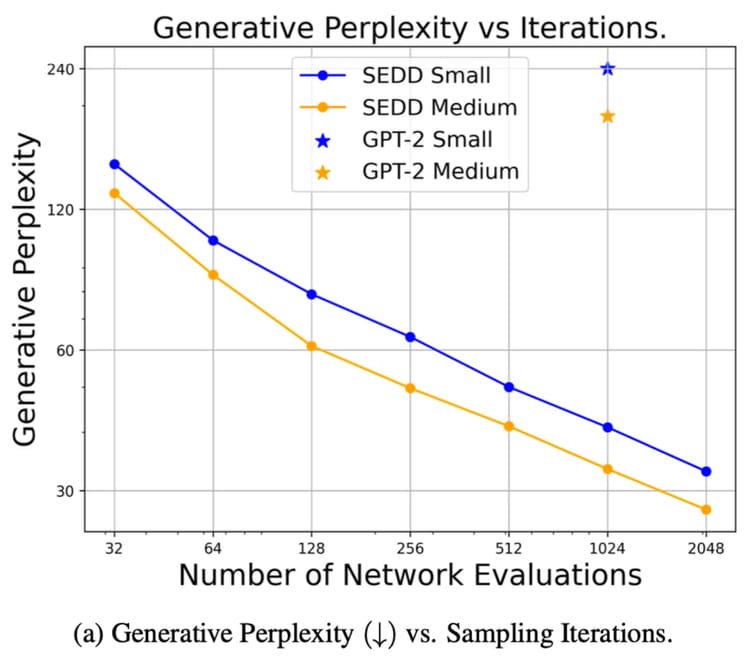

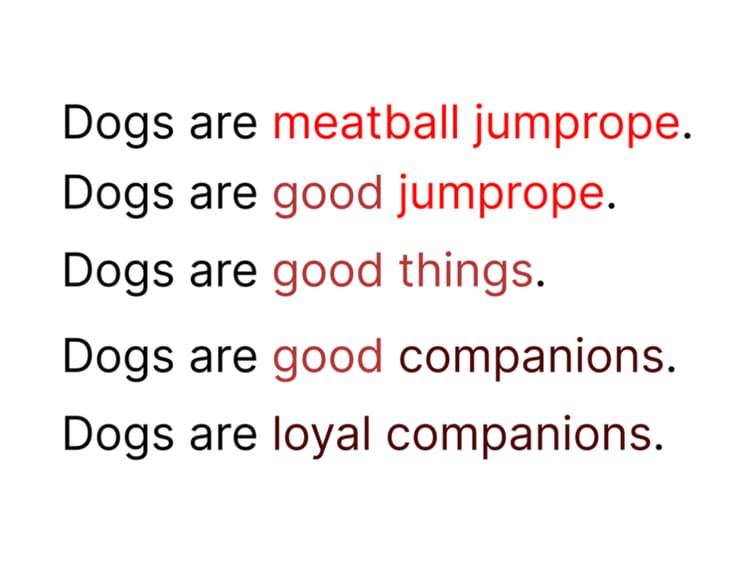

How to Train Diffusion for Text from Scratch

This is part two of a series on Diffusion for Text with Score Entropy Discrete Diffusion (SEDD) models. Today we

ArXiv Dives: Text Diffusion with SEDD

Diffusion models have been popular for computer vision tasks. Recently, models such as Sora have shown how you can apply Diffusion

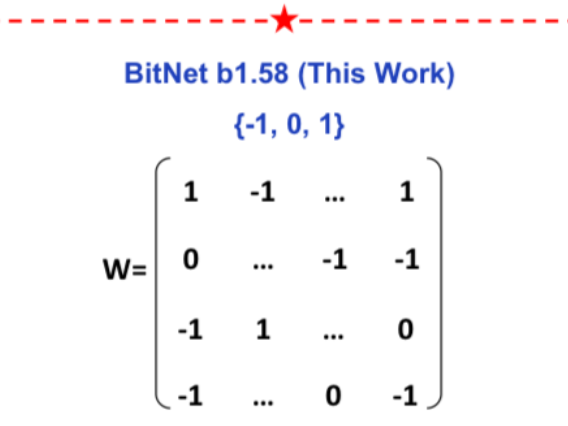

ArXiv Dives: The Era of 1-bit LLMs, All Large Language Models are in 1.58 Bits

This paper presents BitNet b1.58 where every weight in a Transformer can be represented as a {-1, 0, 1}

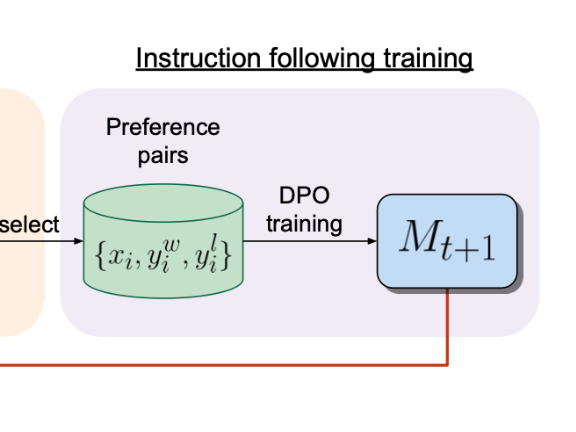

How to train Mistral 7B as a "Self-Rewarding Language Model"

About a month ago we went over the "Self-Rewarding Language Models" paper by the team at Meta AI

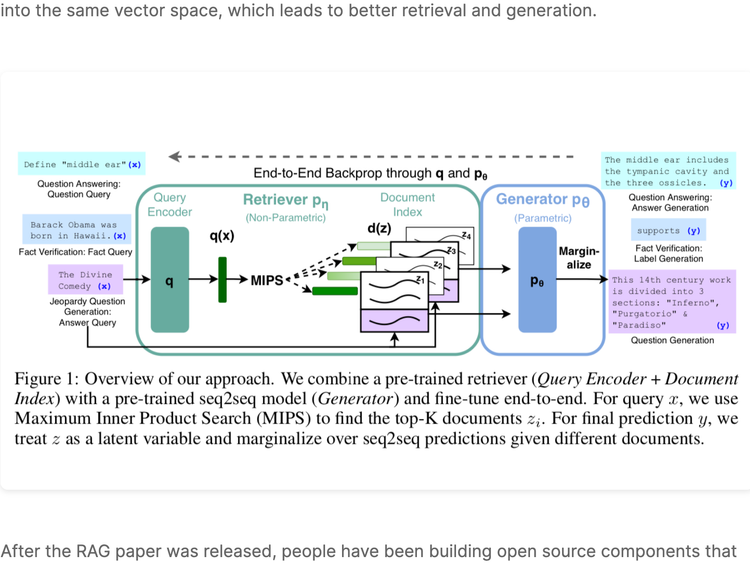

Practical ML Dive - Building RAG from Open Source Pt 1

RAG was introduced by the Facebook AI Research (FAIR) team in May of 2020 as an end-to-end way to include

Practical ML Dive - How to train Mamba for Question Answering

What is Mamba 🐍?

There is a lot of hype about Mamba being a fast alternative to the Transformer architecture. The