Latest

Feb 20

Frank's Red Hot

An AI-generated goat rapping alongside Ludacris in Frank's RedHot's "

Feb 20

Isometric.nyc

A giant isometric pixel-art map of New York City, inspired by SimCity 2000 and Rollercoaster Tycoon. Andy Coenen fine-tuned an

Feb 19

Bell Canada

A fully AI-generated commercial for the Canadian telecom giant Bell. Made in collaboration with

Feb 17

Oxen's Model Report

Welcome to this week's Oxen moooodel report. We know the AI space moves like crazy. There's

Feb 11

How a $1 Qwen3-VL Fine-Tune Beat Gemini 3

Can a $1 fine-tune beat a state-of-the-art closed-source model?

| Model | Accuracy | Time (98 samples) | Cost/Run |
| --- | --- | --- | --- |
| Base Qwen3-VL-8B | 54.1% | ~10 sec | $0.003 |
| Gemini | | | |

Jan 28

How to Train a LTX-2 Character LoRA with Oxen.ai

LTX-2 is a video generation model that can generate not only video frames but audio as well. This model is

Nov 05

How to Use WAN 2.1-VACE to Generate Hollywood-Level Video Edits

Imagine you are shooting a film and you realize that you have the actor wearing the wrong jacket in a

Oct 26

How We Cut Inference Costs from $46K to $6.5K Fine-Tuning Qwen-Image-Edit

At Oxen.ai, we think a lot about what it takes to run high-quality inference at scale. It’s one

Oct 09

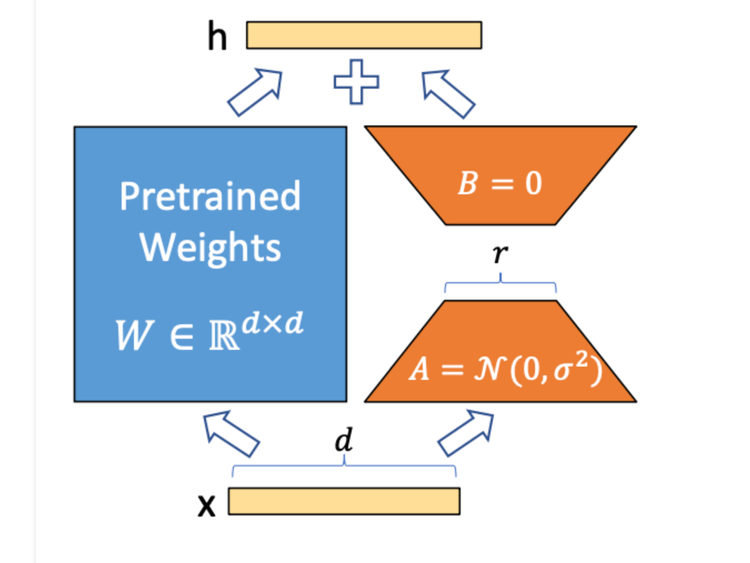

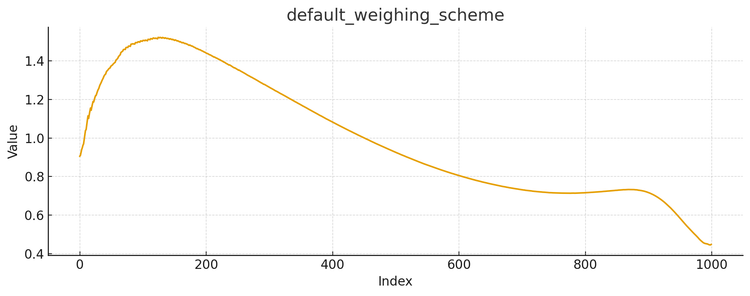

How to Set Noise Timesteps When Fine-Tuning Diffusion Models for Image Generation

Fine-tuning Diffusion Models such as Stable Diffusion, FLUX.1-dev, or Qwen-Image can give you a lot of bang for your

Sep 26

Fine-Tuned Qwen-Image-Edit vs Nano-Banana and FLUX Kontext Dev

Welcome back to Fine-Tuning Friday, where each week we try to put some models to the test and see if