🧠 GRPO VRAM Requirements For the GPU Poor

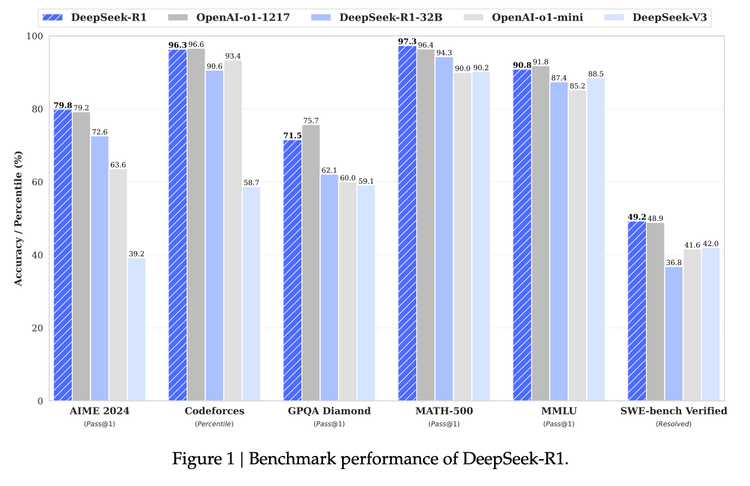

Since the release of DeepSeek-R1, Group Relative Policy Optimization (GRPO) has become the talk of the town for Reinforcement Learning

How DeepSeek R1, GRPO, and Previous DeepSeek Models Work

In January 2025, DeepSeek took a shot directly at OpenAI by releasing a suite of models that “Rival OpenAI’s

No Hype DeepSeek-R1 Reading List

DeepSeek-R1 is a big step forward in the open model ecosystem for AI with their latest model competing with OpenAI

Oxen v0.25.0 Migration

Today we released oxen v0.25.0 🎉 which comes with a few performance optimizations, including how we traverse the Merkle

🌲 Merkle Tree VNodes

In this post we peel back some of the layers of Oxen.ai’s Merkle Tree and show how we

🌲 Merkle Tree 101

Intro

Merkle Trees are important data structures for ensuring integrity, deduplication, and verification of data at scale. They are used

arXiv Dive: RAGAS - Retrieval Augmented Generation Assessment

RAGAS is an evaluation framework for Retrieval Augmented Generation (RAG), from a paper released by Exploding Gradients, AMPLYFI, and CardiffNLP. RAGAS

The Best AI Data Version Control Tools [2025]

Data is often seen as static. It's common to just dump your data into S3 buckets in tarballs

OpenCoder: The OPEN Cookbook For Top-Tier Code LLMs

Welcome to the last arXiv Dive of 2024! Every other week we have been diving into interesting research papers in

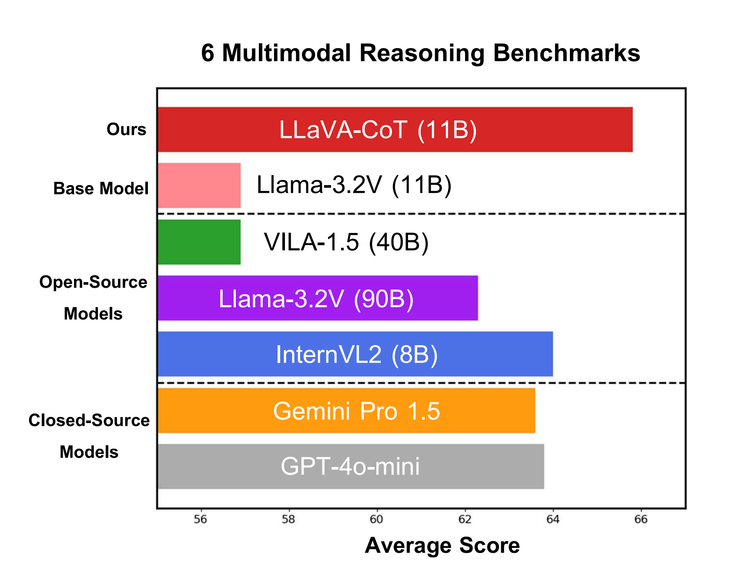

LLaVA-CoT: Let Vision Language Models Reason Step-By-Step

When it comes to large language models, it is still the early innings. Many of them still hallucinate, fail to
