How to train Mistral 7B as a "Self-Rewarding Language Model"

About a month ago we went over the "Self-Rewarding Language Models" paper by the team at Meta AI

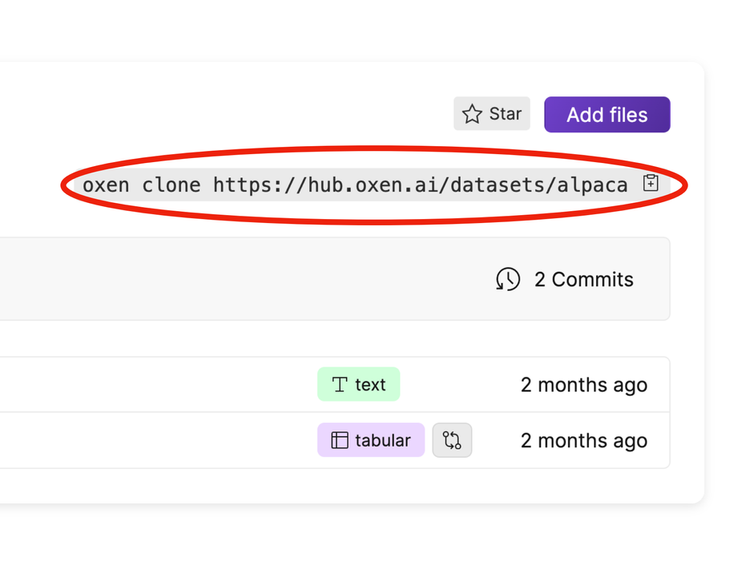

Downloading Datasets with Oxen.ai

Oxen.ai makes it quick and easy to download any version of your data wherever and whenever you need it.

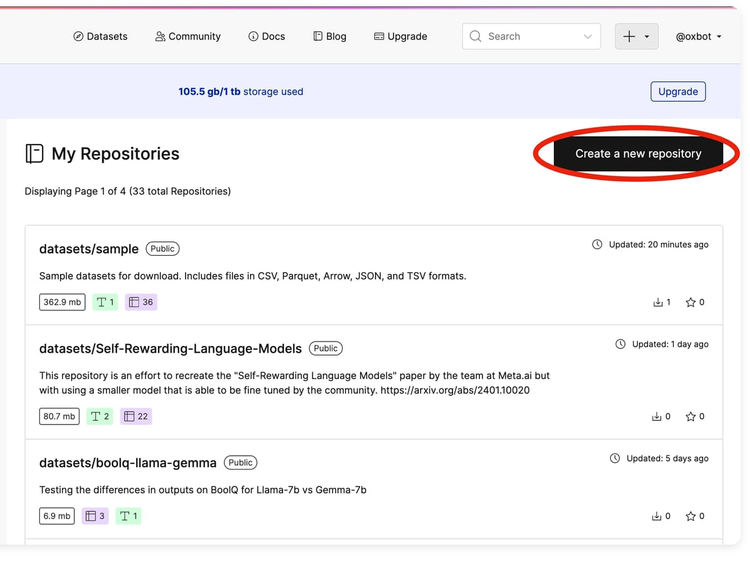

Uploading Datasets to Oxen.ai

Oxen.ai makes it quick and easy to upload your datasets, keep track of every version and share them with

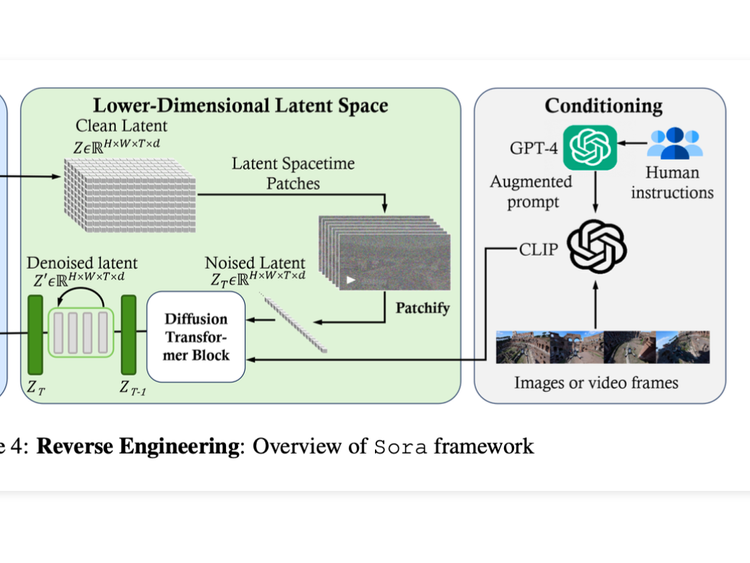

ArXiv Dives - Diffusion Transformers

Diffusion transformers achieve state-of-the-art quality generating images by replacing the commonly used U-Net backbone with a transformer that operates on

"Road to Sora" Paper Reading List

This post is an effort to put together a reading list for our Friday paper club called ArXiv Dives. Since

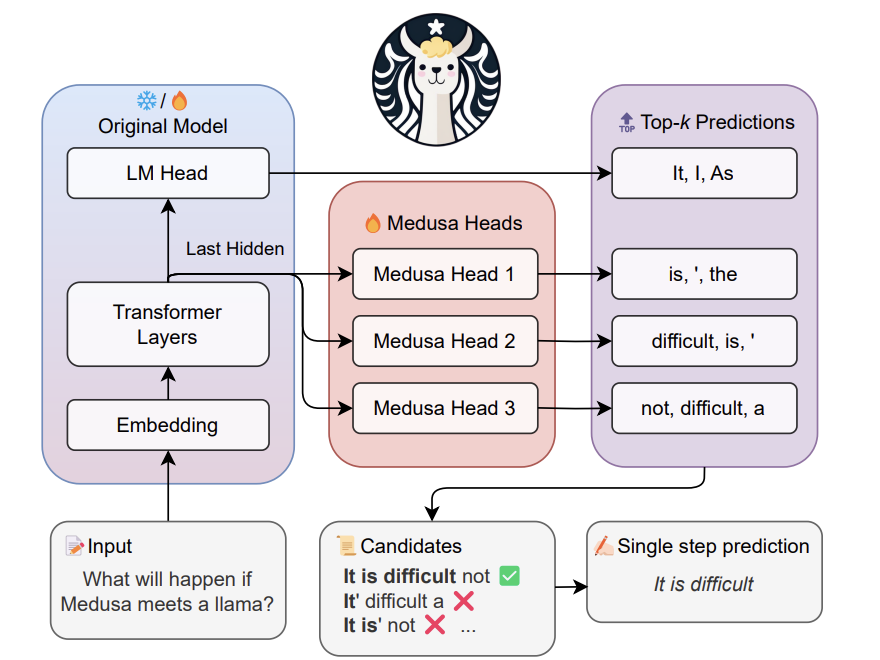

ArXiv Dives - Medusa

In this paper, they present MEDUSA, an efficient method that augments LLM inference by adding extra decoding heads to

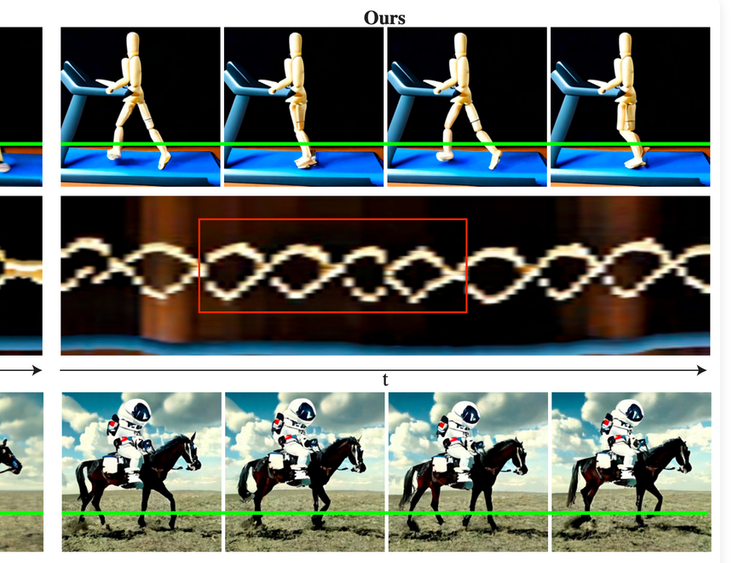

ArXiv Dives - Lumiere

This paper introduces Lumiere – a text-to-video diffusion model designed for synthesizing videos that portray realistic, diverse and coherent motion – a

ArXiv Dives - Depth Anything

This paper presents Depth Anything, a highly practical solution for robust monocular depth estimation. Depth estimation traditionally requires extra hardware

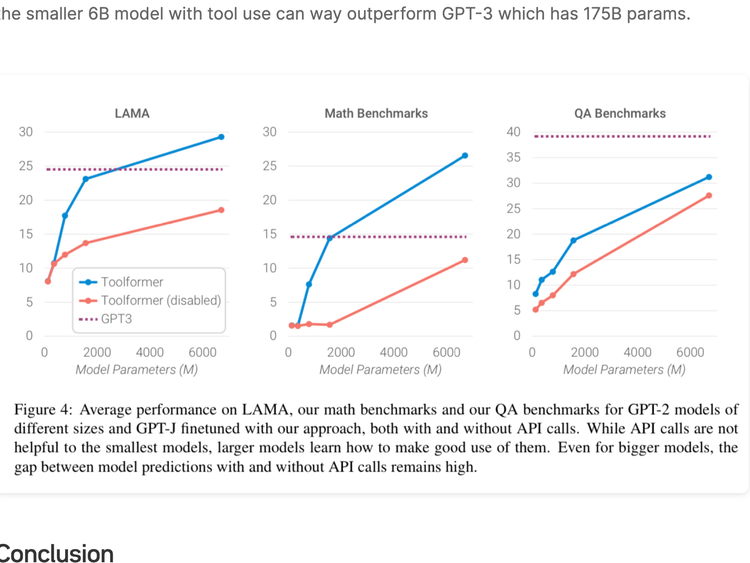

ArXiv Dives - Toolformer: Language models can teach themselves to use tools

Large Language Models (LLMs) show remarkable capabilities to solve new tasks from a few textual instructions, but they also paradoxically

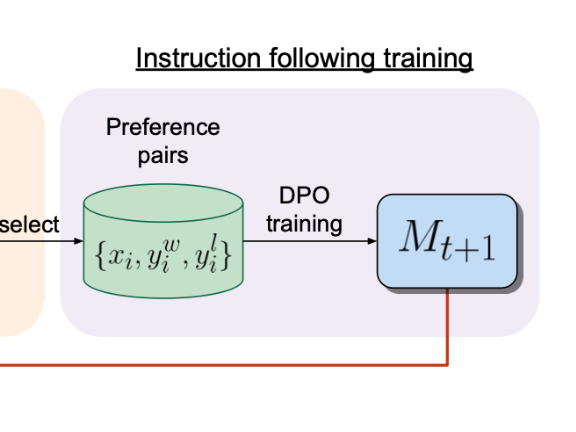

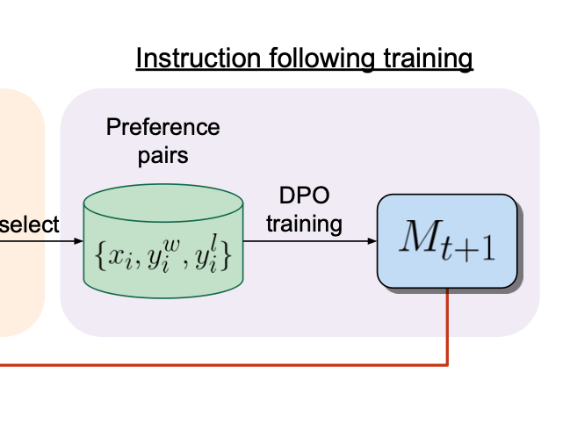

ArXiv Dives - Self-Rewarding Language Models

The goal of this paper is to see if we can create a self-improving feedback loop to achieve “superhuman agents”