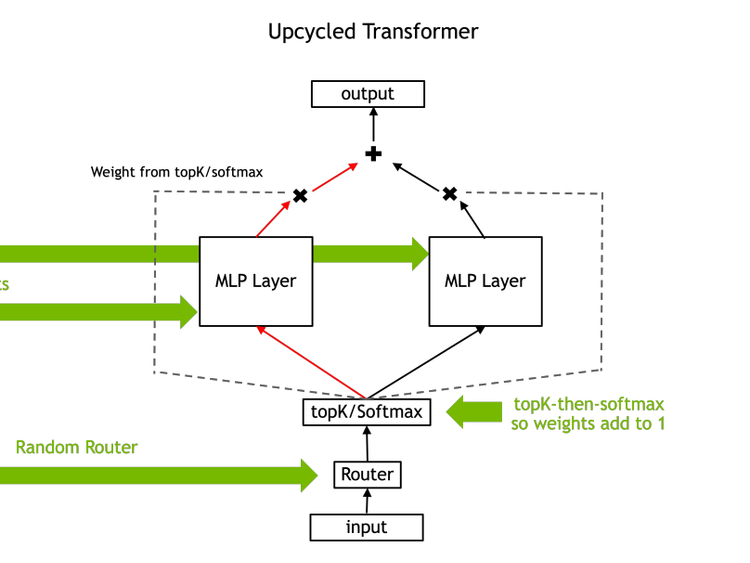

How Upcycling MoEs Beat Dense LLMs

In this arXiv Dive, Nvidia researcher Ethan He presents his co-authored work, Upcycling LLMs in Mixture of Experts (MoE). He

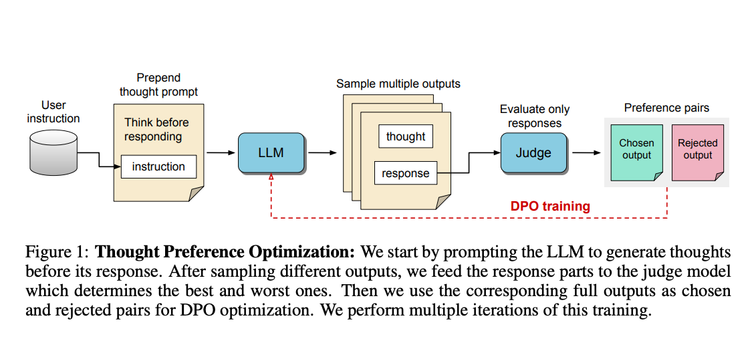

Thinking LLMs: General Instruction Following with Thought Generation

The release of OpenAI-O1 has motivated a lot of people to think deeply about…thoughts 💭. Thinking before you speak is

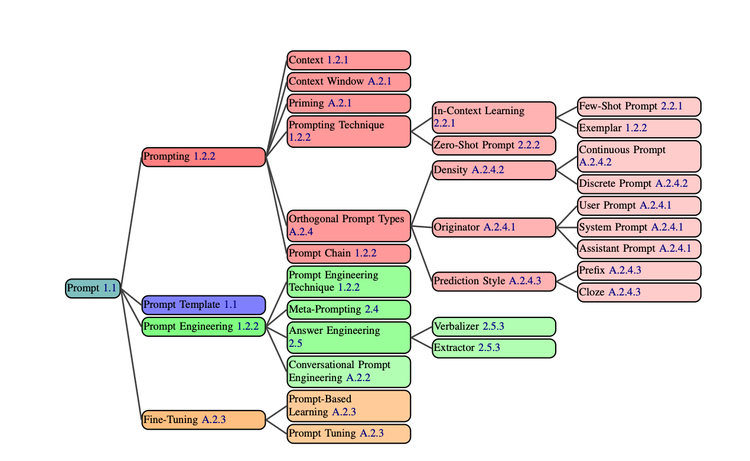

The Prompt Report Part 2: Plan and Solve, Tree of Thought, and Decomposition Prompting

In the last blog, we went over prompting techniques 1-3 of The Prompt Report. In this arXiv Dive, we were lucky

The Prompt Report Part 1: A Systematic Survey of Prompting Techniques

For this blog, we are switching it up a bit. In past arXiv Dives, we have gone deep into the

arXiv Dive: How Flux and Rectified Flow Transformers Work

Flux made quite a splash with its release on August 1st, 2024 as the new state-of-the-art generative

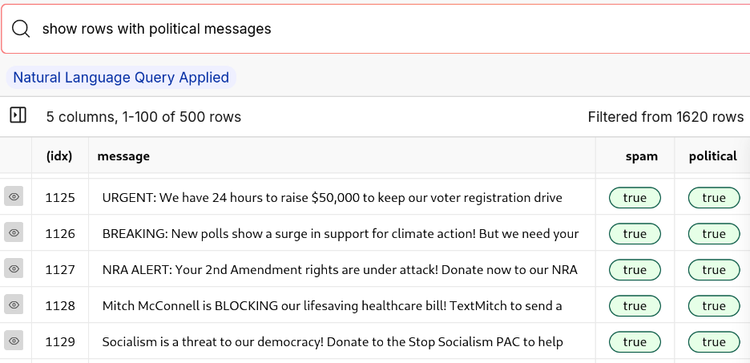

How Well Can Llama 3.1 8B Detect Political Spam? [4/4]

It only took about 11 minutes to fine-tune Llama 3.1 8B on our political spam synthetic dataset using ReFT.

Fine-Tuning Llama 3.1 8B in Under 12 Minutes [3/4]

Meta has recently released Llama 3.1, including their 405-billion-parameter model, which is the most capable open model

arXiv Dive: How Meta Trained Llama 3.1

Llama 3.1 is a set of open-weights foundation models released by Meta, which marks the first time an

How to De-duplicate and Clean Synthetic Data [2/4]

Synthetic data has shown promising results for training and fine-tuning large models, such as Llama 3.1 and the

Create Your Own Synthetic Data With Only 5 Political Spam Texts [1/4]

With the 2024 elections coming up, spam and political texts are more prevalent than ever as political campaigns increasingly turn
